Introduction

Data has always been the foundation of decision-making, but for a long time, the way we managed it was broken. In my two decades of watching technologies rise and fall, I have seen brilliant data models fail simply because the “plumbing” (the data pipeline) was manual, slow, and full of errors. We used to treat data as a static library, but today, data is a high-speed river.

DataOps is the discipline that finally brings order to this flow. It takes the best parts of DevOps (automation, testing, and collaboration) and applies them to data engineering. The DataOps Certified Professional (DOCP) program is the gold standard for anyone who wants to stop fighting with data and start delivering it with confidence.

This master guide is for the engineers and managers in India and across the globe who are ready to move from “manual data work” to “automated data excellence.”

Choose Your Path: 6 Specialized Learning Tracks

Modern engineering is too broad for a one-size-fits-all approach. Depending on your interest, you should pick a path that aligns with your career goals. Here are the six pillars of the modern technical ecosystem:

- DevOps Path: The root of it all. This is for those who love building the pipelines that carry code from a developer’s laptop to the customer. It focuses on speed, CI/CD, and removing manual handoffs.

- DevSecOps Path: Security is no longer an afterthought. This path is for engineers who want to integrate security “gates” directly into the automated pipeline so that unsafe code never reaches the public.

- SRE (Site Reliability Engineering) Path: For those obsessed with stability. SREs use software engineering to solve operations problems, ensuring that systems stay up even when things go wrong.

- AIOps/MLOps Path: As AI becomes common, we need to manage models like software. This track teaches you how to automate the lifecycle of machine learning models.

- DataOps Path: This is the “Data Streamliner” path. It focuses on making sure data moves quickly and accurately from source to consumer without getting corrupted.

- FinOps Path: Cloud costs can spiral out of control. This path bridges the gap between finance and engineering to ensure every cloud dollar is spent wisely.

Role → Recommended Certifications Mapping

Not sure where to start? Match your current or target role to these recommended paths:

| Your Role | Recommended Certification Journey |
| --- | --- |
| Data Engineer | DOCP → MLOps Professional → Cloud Data Architect |
| DevOps Engineer | DevOps Professional → DOCP → DevSecOps |
| SRE | SRE Practitioner → DOCP → Observability Specialist |
| Platform Engineer | Kubernetes Specialist → DOCP → SRE Professional |
| Cloud Engineer | AWS/Azure/GCP Architect → FinOps Practitioner → DOCP |
| Security Engineer | DevSecOps Professional → DOCP (for Data Security) |
| FinOps Practitioner | FinOps Foundation → Cloud Cost Specialist → DOCP |
| Engineering Manager | DevOps Leader → FinOps for Managers → DOCP |

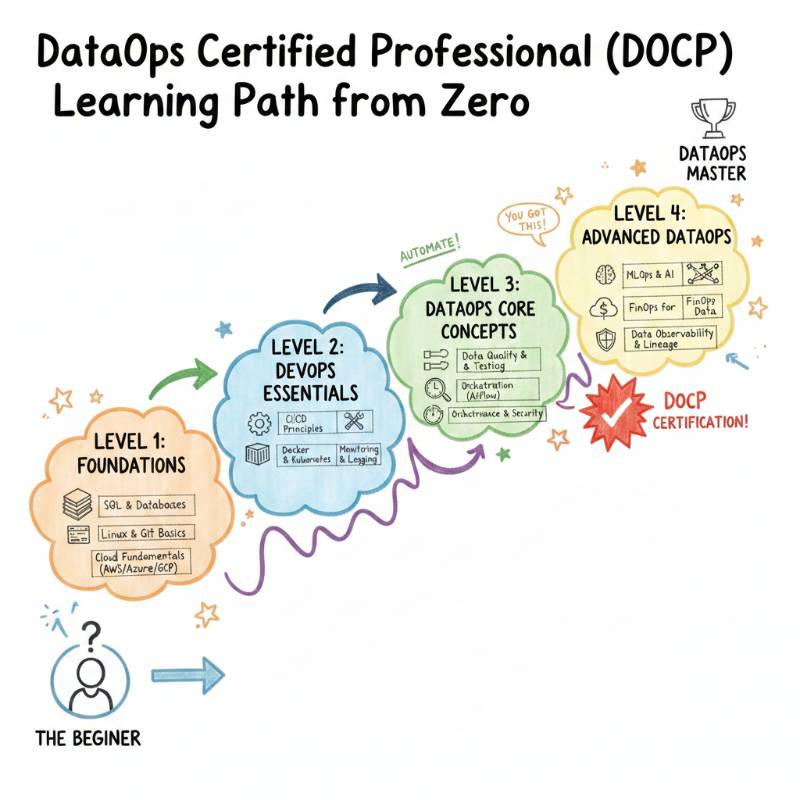

Deep Dive: DataOps Certified Professional (DOCP)

What it is

The DataOps Certified Professional (DOCP) is a specialized certification designed to teach the full lifecycle of automated data management. It focuses on how to apply Agile and DevOps principles to data pipelines to ensure high quality, speed, and reliability.

Who should take it

This program is built for Software Engineers who want to specialize in data platforms, Data Engineers who need better automation skills, and IT Managers who are overseeing data transformation projects. If you are tired of “data silos” and broken pipelines, this is for you.

Skills you’ll gain

- Automated Data Pipelines: Learning how to build “Data-as-Code” using CI/CD.

- Orchestration Mastery: Using tools like Apache Airflow to manage complex data workflows.

- Data Quality Testing: Implementing automated “circuit breakers” that stop bad data before it hits production.

- Containerization for Data: Running data workloads inside Docker and Kubernetes.

- Observability: Setting up monitoring for data drift, pipeline speed, and system health.
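To make the “circuit breaker” idea from the skills list concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not material from the DOCP curriculum: the names `check_batch`, `is_valid`, and `CircuitOpenError`, the validation rule, and the 5% threshold are all hypothetical.

```python
from dataclasses import dataclass


class CircuitOpenError(RuntimeError):
    """Raised when a batch is too dirty to load (hypothetical name)."""


@dataclass
class QualityReport:
    total: int
    failed: int

    @property
    def failure_rate(self) -> float:
        # An empty batch counts as fully failed so it cannot slip through.
        return 1.0 if self.total == 0 else self.failed / self.total


def is_valid(row: dict) -> bool:
    """Illustrative rule: id is present and amount is a non-negative number."""
    amount = row.get("amount")
    return (
        row.get("id") is not None
        and isinstance(amount, (int, float))
        and amount >= 0
    )


def check_batch(rows: list[dict], max_failure_rate: float = 0.05) -> QualityReport:
    """Validate a batch and trip the breaker if too many rows fail."""
    failed = sum(1 for row in rows if not is_valid(row))
    report = QualityReport(total=len(rows), failed=failed)
    if report.failure_rate > max_failure_rate:
        # Halting here keeps the bad batch out of production tables.
        raise CircuitOpenError(
            f"{failed}/{len(rows)} rows failed validation; load halted"
        )
    return report
```

A pipeline step would call `check_batch` just before the load and let the exception stop the run. The threshold is a design choice: a small tolerance avoids halting on a single malformed row, while a strict `0.0` suits feeds where any bad record is unacceptable.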

Real-world projects you should be able to do

- Create a fully automated ETL (Extract, Transform, Load) pipeline that triggers on new data arrivals.

- Build a monitoring dashboard that alerts the team when data quality falls below a certain threshold.

- Design a self-healing system that automatically retries failed data jobs without human intervention.
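The “self-healing” project above usually starts with automatic retries. The sketch below shows one common approach, exponential backoff, using only the Python standard library. The function name `run_with_retries` and its parameters are assumptions made for illustration. Orchestrators such as Apache Airflow ship their own retry settings, which you would normally prefer over hand-rolled code.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    job: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run job, retrying on any exception with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to a human
            # Wait 1x, 2x, 4x, ... the base delay before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")
```

The `sleep` parameter is injectable so the logic can be tested without real waiting. In production you would also want to retry only on transient errors (for example, connection failures) rather than on every exception, since retrying a job that fails on bad input just repeats the failure.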

Preparation Plans for Success

Your time is valuable, so choose a plan that fits your current experience level:

- 7–14 Days (The Expert Sprint): Focus on the DataOps Manifesto and hands-on tool integration. This is for those already working in DevOps who just need to learn the “Data” nuances.

- 30 Days (The Professional Path): Spend 1 hour a day. The first two weeks should be for theory and principles. The last two weeks should be for building the real-world projects in the lab.

- 60 Days (The Deep Dive): Ideal for beginners or career switchers. Spend the first month mastering Linux and basic SQL, then focus the second month entirely on DataOps automation.

Common Mistakes to Avoid

- Focusing only on tools: DataOps is a culture of collaboration, not just a list of software like Jenkins or Airflow.

- Skipping Automated Testing: If you don’t test your data, you are just delivering “garbage” to your stakeholders faster.

- Ignoring the “Ops” side: Data scientists often forget that data needs to be monitored and maintained in production, not just built once.

Master Certification Table

| Track | Level | Who it’s for | Prerequisites | Skills covered | Recommended order |
| --- | --- | --- | --- | --- | --- |
| DataOps | Professional | Engineers & Managers | Basic SQL/Data | Pipeline Automation, Data Quality | Start with DOCP |
| DevOps | Foundation | All Tech Roles | Basic Linux | CI/CD, Git, Automation | Take before DOCP |
| SRE | Professional | Ops/SREs | DevOps Basics | Reliability, SLOs | Take after DOCP |
| DevSecOps | Professional | Security/Devs | DevOps Basics | Security as Code, SAST/DAST | Take after DOCP |

Training & Certification Support Institutions

Choosing the right partner for your learning journey is crucial. These institutions are recognized for their commitment to quality and hands-on expertise:

DevOpsSchool: This is the primary provider for the DOCP certification and sets the industry standard. They offer a comprehensive, instructor-led curriculum that is 70% lab-focused, ensuring you build real-world, automated pipelines. Their programs are mentored by seasoned experts who provide lifetime access to course materials and post-training career support.

Cotocus: A specialized consulting and training firm that excels in corporate technical transformations. They focus on delivering high-end workshops tailored for teams that need to implement DataOps at scale in complex cloud environments. Their training is highly practical, helping organizations bridge the gap between legacy data management and modern automated workflows.

Scmgalaxy: A veteran community-driven platform that provides a massive library of tutorials, documentation, and community support. It serves as an essential resource for troubleshooting and staying updated with the latest trends in configuration management and DataOps. They offer a unique blend of 250+ hours of self-paced videos and live sessions to suit all learning speeds.

BestDevOps: This institution is known for its result-oriented training programs designed to simplify complex technical concepts. They focus on providing a “Tools-First” approach, ensuring that engineers master the specific automation software required for daily operations. Their curriculum is built specifically for those who want to see immediate, practical benefits in their job performance.

devsecopsschool: A specialized portal focusing entirely on the “Security” pillar of modern engineering. They offer deep-dive modules into vulnerability management, data masking, and compliance as code. It is the perfect institution for professionals who want to ensure their data pipelines are not just fast, but also secure and compliant with global standards.

sreschool: Dedicated to Site Reliability Engineering, this school focuses on teaching the principles of system scalability and reliability. They help professionals understand how to build self-healing data systems and implement observability stacks. Their training is essential for those who want to maintain 99.9% uptime for their critical data platforms.

aiopsschool: This forward-looking school focuses on the intersection of AI and operations. They teach how to use predictive analytics and machine learning to monitor data pipeline health and detect anomalies before they cause downtime. It is an ideal stop for engineers looking to move into MLOps and automated incident response.

dataopsschool: This is the most specialized branch focusing exclusively on the end-to-end data lifecycle. They provide the deep-dive technical tracks needed to master data ingestion, transformation, and quality testing. Their training ensures that data engineers and scientists can collaborate seamlessly using a shared, automated framework.

finopsschool: This institute focuses on cloud financial management and cost optimization for data workloads. Since data processing in the cloud can be expensive, they teach you how to bring financial accountability to your engineering teams. You will learn how to balance the performance of your data pipelines with the reality of cloud budgets.

Next Certifications to Take

Once you earn your DOCP, consider these three directions for growth:

- Same Track (Specialization): MLOps Certified Professional. This is the logical next step to learn how to manage the specialized data needs of AI and Machine Learning models.

- Cross-Track (Broadening): SRE Certified Professional. Learning how to keep your data systems highly available and reliable is a “superpower” in the modern enterprise.

- Leadership (Management): Certified DevOps Manager. If you want to lead teams and design the high-level strategy for an entire organization, this is your path.

Frequently Asked Questions (FAQs)

1. How difficult is the DOCP certification?

The DOCP is a professional-level certification. It is moderately challenging because it requires you to understand both data logic and automation pipelines. It is not just about memorizing facts; it is about solving problems.

2. What are the main prerequisites?

While anyone can enroll, having a basic understanding of SQL and the command line (Linux) will help you move much faster through the curriculum.

3. Is this certification recognized in India?

Yes. India has one of the largest data markets in the world, and companies like TCS, Wipro, and high-growth startups value the DOCP because it shows you can handle modern, automated data environments.

4. How much time do I need to study?

Most working professionals find that 30 days of consistent study (about 5-7 hours a week) is the perfect amount of time to prepare for the assessment.

5. Does DOCP cover specific cloud providers?

The principles of DOCP apply to all clouds (AWS, Azure, GCP). The training usually shows you how to implement these ideas using tools that work across any environment.

6. Is there a specific sequence I should follow for certifications?

I usually recommend taking a basic DevOps course first to understand pipelines, then specializing with the DOCP to master data-specific automation.

7. Can an Engineering Manager benefit from DOCP?

Absolutely. Managers need to understand the “DataOps mindset” to properly staff their teams and set realistic goals for data delivery and quality.

8. What is the value of this certification globally?

DataOps is a global trend. Whether you are in Europe, the US, or Asia, the need for reliable data pipelines is universal. This certification is recognized by practitioners worldwide.

9. What tools will I learn during the training?

You will typically work with tools like Git for version control, Jenkins or GitLab for CI/CD, and Apache Airflow for orchestration.

10. How long is the certification valid?

As with most technical credentials, it is a good idea to refresh your knowledge every 2-3 years as the technology stack evolves.

11. Does this certification help with career growth?

Yes. DataOps is currently one of the highest-paying niches in data engineering because very few people know how to combine “Data” with “Ops.”

12. Is the exam hands-on or theoretical?

The assessment is designed to test your practical knowledge. You should expect questions that require you to apply DataOps principles to real-world scenarios.


FAQs on DataOps Certified Professional (DOCP)

- Is DataOps just DevOps for Data, or is there more to it?

While it borrows heavily from DevOps (CI/CD and automation, for example), DataOps is unique because it must handle “Data Quality” and “Logic” simultaneously. In software, code is versioned; in DataOps, we have to version the code and account for the ever-changing nature of the data itself. This certification clarifies those specific nuances.

- How does the DOCP certification address “Data Silos”?

A major focus of the DOCP is the cultural shift. It teaches you how to build a “Data Hub” environment where engineers, scientists, and business users work on a unified pipeline. It provides the technical framework to break down silos by automating the hand-offs between teams.

- Will this certification teach me how to handle “Bad Data” in production?

Yes. One of the core pillars of the DOCP is automated testing for data. You will learn how to implement “Data Quality Gates” that automatically catch and quarantine “dirty data” before it ever reaches your dashboard or ML model, saving hours of manual cleanup.

- How much “coding” knowledge is required for the DOCP?

You don’t need to be a software developer, but you should be comfortable with SQL and have a basic understanding of Python or Shell scripting. The focus is on orchestration (writing the logic that moves and validates data) rather than building complex applications from scratch.

- What is the ROI for a company that gets its engineers DOCP certified?

The Return on Investment is usually seen in “Cycle Time.” Companies with certified DataOps professionals typically see a massive reduction in the time it takes to move a data idea from a request to a live report, often moving from weeks to hours.

- Does the DOCP cover cloud-specific data tools like Snowflake or BigQuery?

The certification teaches you the architecture that makes these tools work. Whether you use Snowflake, Redshift, or BigQuery, the DataOps principles of versioning, testing, and monitoring remain the same. It prepares you to manage a modern data stack regardless of the specific vendor.

- How does the DOCP prepare me for the “Observability” aspect of data?

Modern data environments are complex. The DOCP curriculum includes training on “Data Observability”: the ability to track the health, lineage, and performance of data throughout its lifecycle. This ensures you can fix issues before the business even notices them.

- Can I take this certification if I am currently a Database Administrator (DBA)?

Actually, DBAs are some of the most successful candidates. The DOCP helps a traditional DBA transition into a “DataOps Engineer” role by teaching them how to apply automation and cloud-native principles to the data management skills they already possess.
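The “Data Quality Gates” answer above describes catching and quarantining dirty data instead of stopping the whole pipeline. A rough Python sketch of that row-level routing follows. The function `quality_gate`, the `RULES` mapping, and the order fields are hypothetical names chosen for illustration, not part of any DOCP tooling.

```python
from typing import Callable, Iterable

Row = dict
Rule = Callable[[Row], bool]


def quality_gate(
    rows: Iterable[Row], rules: dict[str, Rule]
) -> tuple[list[Row], list[dict]]:
    """Split rows into clean rows and quarantined rows with failure reasons."""
    clean: list[Row] = []
    quarantined: list[dict] = []
    for row in rows:
        # Record every rule the row breaks so cleanup can be targeted.
        failed = [name for name, rule in rules.items() if not rule(row)]
        if failed:
            quarantined.append({"row": row, "failed_rules": failed})
        else:
            clean.append(row)
    return clean, quarantined


# Hypothetical rules for an orders feed.
RULES: dict[str, Rule] = {
    "has_order_id": lambda r: bool(r.get("order_id")),
    "non_negative_total": lambda r: (
        isinstance(r.get("total"), (int, float)) and r["total"] >= 0
    ),
}
```

Only the `clean` list would continue to the dashboard or model. The `quarantined` list would be written to a side table for review, and keeping the failed rule names next to each row is what makes that review quick.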

Testimonials

“I spent years manually fixing broken data loads. After completing the DOCP, I implemented an automated testing framework that caught 90% of our errors before they reached the warehouse. It changed my career.”

— Rohan S., Data Engineer

“As a manager, I needed a way to bridge the gap between my data scientists and my IT team. The DOCP gave us the common language and process we needed to stop the finger-pointing and start delivering.”

— Ananya K., Engineering Manager

“The hands-on labs at DevOpsSchool were incredible. I didn’t just learn about Airflow; I actually built a pipeline that scaled. This is the most practical course I’ve ever taken.”

— Vikram J., Platform Engineer

Conclusion

The days of manual data management are gone. To compete today, you need to be fast, reliable, and automated. The DataOps Certified Professional (DOCP) is the roadmap that will get you there. It isn’t just a badge on your resume; it is a fundamental shift in how you work with technology.

I have seen many trends come and go, but the need for high-quality data is permanent. I invite you to take this step and lead the change in your organization.