Are you curious about how Azure uses MLOps for monitoring and observability? You’ve come to the right place! In this article, we explore what MLOps is and how Azure Machine Learning applies it to monitoring and observing machine learning models.

What is MLOps?

Before we dive into how Azure is using MLOps, let’s first define what MLOps is. MLOps is the practice of applying DevOps principles to the machine learning lifecycle. It is a set of processes and tools that enable teams to build, test, deploy, and monitor machine learning models in a systematic and automated way.
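To make the build, test, deploy cycle concrete, here is a minimal sketch in plain Python. The `Pipeline` class, its stage methods, and the 0.90 accuracy threshold are hypothetical illustrations, not part of any Azure SDK. A real pipeline would run each stage as a separate automated job.

```python
"""A minimal, hypothetical MLOps pipeline: train -> evaluate -> gate -> deploy.

None of these names come from Azure Machine Learning; they only illustrate
how a pipeline chains automated stages and blocks a weak model from shipping.
"""
from dataclasses import dataclass, field


@dataclass
class Model:
    version: int
    accuracy: float


@dataclass
class Pipeline:
    min_accuracy: float  # hypothetical quality bar enforced by the gate
    deployed: list = field(default_factory=list)

    def train(self, version: int, accuracy: float) -> Model:
        # Stand-in for a real training job; accuracy is passed in directly.
        return Model(version=version, accuracy=accuracy)

    def evaluate(self, model: Model) -> bool:
        # Automated test stage: the model must meet the accuracy threshold.
        return model.accuracy >= self.min_accuracy

    def deploy(self, model: Model) -> None:
        # Stand-in for publishing the model; here we just record its version.
        self.deployed.append(model.version)

    def run(self, version: int, accuracy: float) -> bool:
        model = self.train(version, accuracy)
        if not self.evaluate(model):
            return False  # gate failed: nothing is deployed
        self.deploy(model)
        return True


pipeline = Pipeline(min_accuracy=0.90)
print(pipeline.run(version=1, accuracy=0.93))  # True
print(pipeline.run(version=2, accuracy=0.85))  # False
print(pipeline.deployed)                       # [1]
```

The key design choice is the gate. An automated evaluation step decides whether a model is deployed, so a regression is stopped before it reaches production.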

MLOps is essential for keeping machine learning models reliable, scalable, and maintainable. It helps teams collaborate effectively, reduce development time and cost, and keep models performing well after they reach production.

How Azure is Using MLOps in Monitoring and Observability

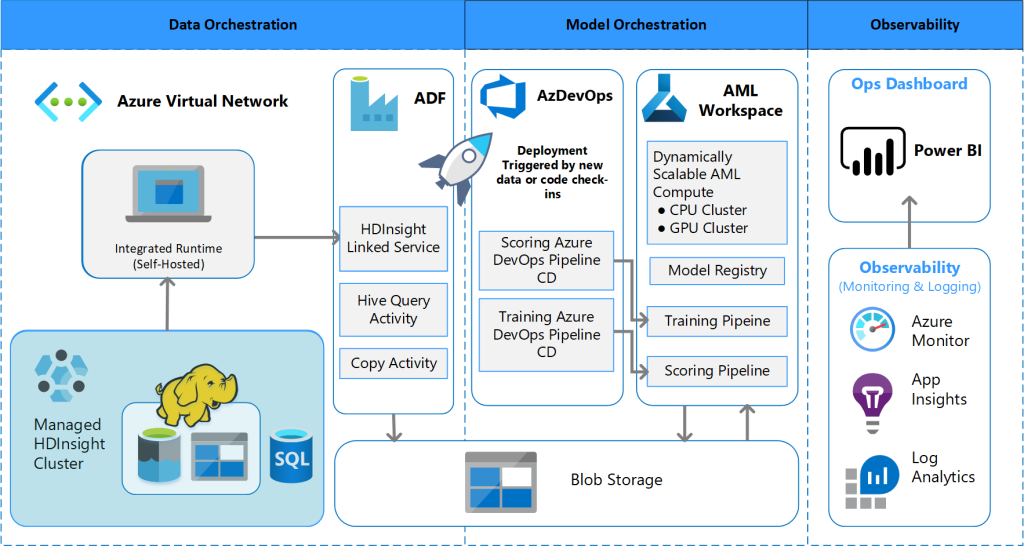

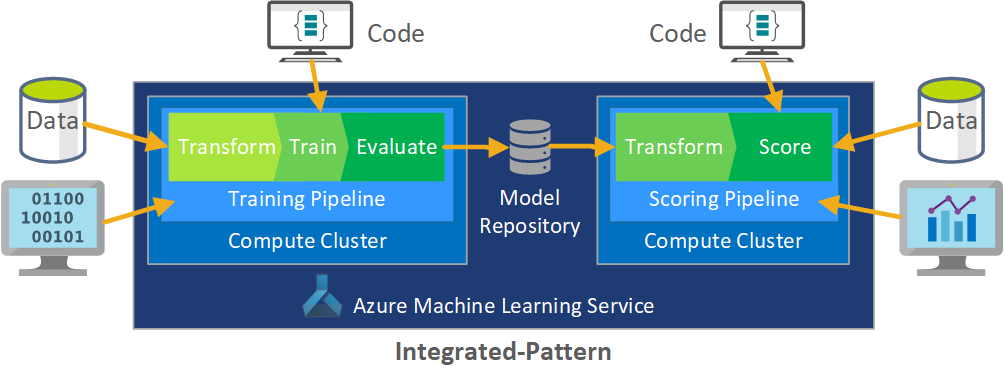

Azure has integrated MLOps into its machine learning services, providing a comprehensive set of tools for managing the machine learning lifecycle. Azure Machine Learning provides end-to-end MLOps capabilities, including model training, deployment, and monitoring.

Model Training

Azure Machine Learning provides a cloud-based environment for model training, making it easy for teams to collaborate on model development. The platform supports a wide range of popular machine learning frameworks and tools, including PyTorch, TensorFlow, and scikit-learn.

Model Deployment

Azure Machine Learning is built to deploy machine learning models at scale. The platform supports several deployment targets, including managed online endpoints, Azure Kubernetes Service, and Azure Container Instances. Teams can deploy a registered model from the studio with a few clicks, and the platform automates much of the process, including model versioning and scaling.
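New model versions are often rolled out gradually. Azure Machine Learning online endpoints can split traffic between multiple deployments, for example an existing "blue" deployment and a new "green" one. The sketch below simulates that idea with weighted random routing in plain Python. `pick_deployment` and the 90/10 split are hypothetical and do not reflect how Azure routes requests internally.

```python
"""Hypothetical sketch of weighted traffic routing between two model versions.

This mimics the idea of a blue/green (canary) rollout; it is not how Azure
Machine Learning routes requests internally.
"""
import random


def pick_deployment(traffic: dict, rng: random.Random) -> str:
    """Choose a deployment name with probability proportional to its weight."""
    if sum(traffic.values()) != 100:
        raise ValueError("traffic percentages must sum to 100")
    names = list(traffic)
    weights = [traffic[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


# Send about 10% of simulated requests to the new "green" version.
traffic = {"blue": 90, "green": 10}
rng = random.Random(42)  # fixed seed so the simulation is repeatable
counts = {"blue": 0, "green": 0}
for _ in range(10_000):
    counts[pick_deployment(traffic, rng)] += 1
print(counts)
```

If the green version behaves well, its share can be raised step by step. If it does not, setting its weight back to 0 rolls traffic back to blue without redeploying anything.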

Model Monitoring

Once a model is deployed, Azure Machine Learning provides monitoring and observability capabilities. Endpoint metrics such as request latency and error rates are available through Azure Monitor, and model monitoring can track signals such as data drift and, when ground-truth labels are available, model accuracy. Teams can configure alerts that fire when these metrics deviate from expected levels.
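The alerting idea can be sketched in plain Python as a rolling window over recent requests. This assumes each prediction eventually receives a ground-truth label, which is needed to compute accuracy. The `MetricMonitor` class, the window size, and the thresholds are hypothetical and are not Azure Machine Learning defaults.

```python
"""Hypothetical sketch of threshold-based alerting on model metrics.

The window size and thresholds are illustrative assumptions,
not Azure Machine Learning defaults.
"""
from collections import deque
from statistics import mean


class MetricMonitor:
    def __init__(self, window: int, max_latency_ms: float, min_accuracy: float):
        self.latencies = deque(maxlen=window)  # most recent request latencies
        self.correct = deque(maxlen=window)    # 1 if prediction matched label
        self.max_latency_ms = max_latency_ms
        self.min_accuracy = min_accuracy

    def record(self, latency_ms: float, was_correct: bool) -> None:
        # Older entries fall out of the window automatically (deque maxlen).
        self.latencies.append(latency_ms)
        self.correct.append(1 if was_correct else 0)

    def alerts(self) -> list:
        """Return a message for every metric outside its expected range."""
        messages = []
        if not self.latencies:
            return messages  # no data yet, nothing to alert on
        avg_latency = mean(self.latencies)
        accuracy = mean(self.correct)
        if avg_latency > self.max_latency_ms:
            messages.append(
                f"latency {avg_latency:.1f} ms exceeds {self.max_latency_ms} ms"
            )
        if accuracy < self.min_accuracy:
            messages.append(f"accuracy {accuracy:.2f} below {self.min_accuracy}")
        return messages


monitor = MetricMonitor(window=4, max_latency_ms=100.0, min_accuracy=0.75)
for latency, ok in [(80, True), (90, True), (150, False), (160, False)]:
    monitor.record(latency, ok)
print(monitor.alerts())
```

A rolling window keeps the check focused on recent behavior, so a model that degrades after deployment triggers an alert even if its long-run average still looks healthy.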

MLOps adoption has grown quickly in recent years, and it is becoming increasingly important as more organizations adopt machine learning to drive business value.

Think of MLOps as the maintenance program for a well-oiled machine. Just as a machine needs regular maintenance and monitoring to run at peak performance, machine learning models need MLOps practices to stay reliable and scalable.

Have you ever wondered how machine learning models are monitored and observed? How can we ensure that these models are reliable and scalable in today’s fast-paced environment? The answer is MLOps.

MLOps is like a new toy that everyone wants to play with, but not everyone knows how to use. A proper understanding of MLOps is what keeps models running properly and delivering the best results.

Conclusion

In short, Azure applies MLOps to monitoring and observability through Azure Machine Learning, which provides a comprehensive set of tools for managing the machine learning lifecycle. MLOps is becoming increasingly important as more organizations adopt machine learning to drive business value, and with its end-to-end MLOps capabilities, Azure Machine Learning is a strong option for teams that want to build, test, deploy, and monitor machine learning models. So, if you’re interested in MLOps and its impact on machine learning, give Azure Machine Learning a try!