Are you struggling with log analysis and feeling overwhelmed by the amount of data you have to sift through? DataOps can help. In this article, we will explore how DataOps can be applied to log analysis and share practical tips for implementing it in your organization.

Understanding DataOps

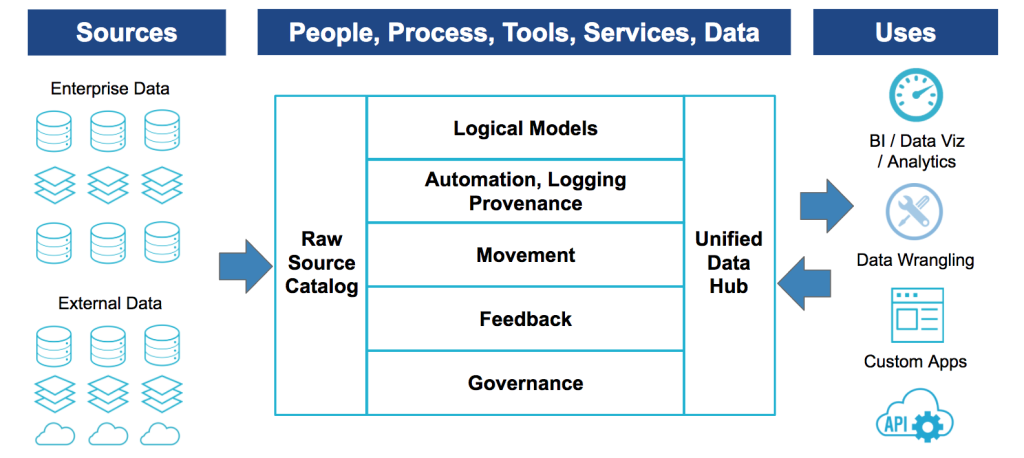

Before we dive into how DataOps can be used for log analysis, let’s first understand what it is. DataOps is a methodology that brings together the principles of DevOps and agile development to create a streamlined and collaborative approach to data management. It involves automating data pipelines, fostering collaboration between teams, and using data as a strategic asset.

Why DataOps is important for log analysis

Log analysis is a critical part of maintaining and improving the performance of any system. It involves analyzing log data to identify system issues, errors, and anomalies. However, analyzing log data can be time-consuming and challenging, especially when dealing with large volumes of data. This is where DataOps comes in.

DataOps can help streamline log analysis by automating the process of collecting, processing, and analyzing log data. By using DataOps, you can create a more efficient and collaborative workflow, enabling teams to work together to identify and resolve issues quickly.

Implementing DataOps for log analysis

Now that we understand why DataOps is important for log analysis, let’s explore how to implement it in your organization. Here are some practical tips to get started:

1. Automate log collection and processing

The first step in implementing DataOps for log analysis is to automate log collection and processing. This involves setting up a pipeline that automatically collects log data from different sources, such as servers, applications, and databases, and parses it into a consistent, structured format. Open-source collectors such as Logstash and Fluentd, or a commercial platform such as Splunk, can handle this step.
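To make the processing step concrete, here is a minimal Python sketch of what a collector does once it has the raw lines: parse each one into structured fields and skip anything malformed. The log format, the field names, and the `parse_line` and `process` helpers are all assumptions made for illustration; they are not taken from Logstash, Fluentd, or Splunk, which each have their own configuration for this.

```python
import re
from collections import Counter

# Hypothetical log format: "<ISO timestamp> <LEVEL> <service>: <message>"
LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<service>[\w-]+):\s+(?P<message>.*)$"
)


def parse_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line.strip())
    return match.groupdict() if match else None


def process(lines):
    """Parse raw lines, drop malformed ones, and count records per level."""
    records = [r for r in map(parse_line, lines) if r is not None]
    counts = Counter(r["level"] for r in records)
    return records, counts


# Made-up sample input, including one line that does not fit the format.
sample = [
    "2024-01-01T10:00:00Z INFO web: request served",
    "2024-01-01T10:00:01Z ERROR db: connection refused",
    "not a log line",
    "2024-01-01T10:00:02Z ERROR web: upstream timeout",
]

records, counts = process(sample)
print(counts)
```

In a real pipeline the structured records would be shipped to a central store rather than kept in memory, but the parse-then-aggregate shape stays the same.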

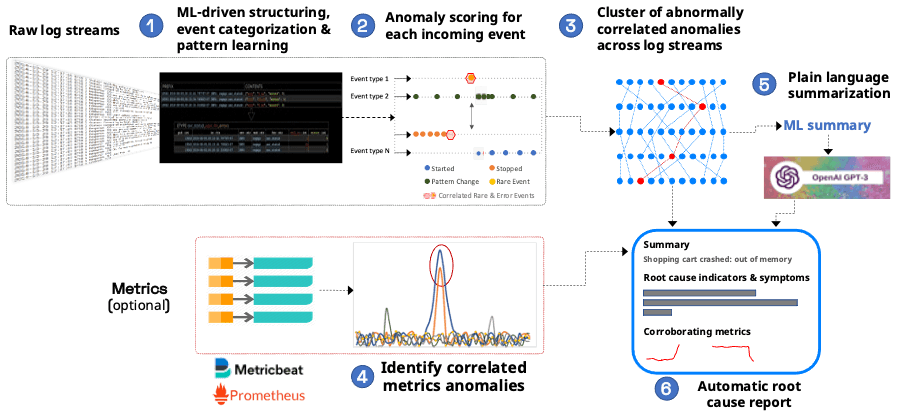

2. Use machine learning for log analysis

Machine learning can be used to analyze log data and identify patterns and anomalies that may be difficult to detect manually. This lets you automate much of the detection work, reducing the time and effort required for log analysis, although resolving the underlying issue usually still needs human judgment. The Elastic (ELK) Stack, for example, includes machine learning features for anomaly detection that are surfaced through Kibana, while Grafana is better suited to visualizing and alerting on the results.
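As a rough illustration of the idea, the sketch below flags time intervals whose error count is unusually far from the average. Note that this is a simple statistical baseline (a z-score check), not a trained machine learning model, and it is not how the Elastic Stack implements anomaly detection. The `find_anomalies` function, the threshold of 2.0, and the sample counts are assumptions chosen for the example.

```python
from statistics import mean, stdev


def find_anomalies(counts, threshold=2.0):
    """Return indices of intervals whose count lies more than
    `threshold` sample standard deviations from the mean."""
    if len(counts) < 2:
        return []  # not enough data to estimate spread
    mu = mean(counts)
    sigma = stdev(counts)
    if sigma == 0:
        return []  # all intervals identical, nothing stands out
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


# Made-up error counts per minute; the seventh interval contains a spike.
errors_per_minute = [3, 4, 2, 5, 3, 4, 40, 3, 2, 4]

anomalies = find_anomalies(errors_per_minute)
print(anomalies)
```

A single large spike inflates the mean and standard deviation it is measured against, so production systems tend to use more robust baselines or learned models. The workflow is the same, though: compute a baseline, score each interval, and alert on outliers.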

3. Foster collaboration between teams

DataOps is all about collaboration between teams. By fostering collaboration between development, operations, and data science teams, you can create a more efficient and effective workflow for log analysis. This involves setting up regular meetings, using collaborative tools such as Slack or Microsoft Teams, and creating a culture of continuous improvement.

4. Use data as a strategic asset

Finally, it’s essential to treat data as a strategic asset, so that it drives business decisions and improves the performance of your systems. This involves creating a data-driven culture, investing in the right tools and technologies, and regularly monitoring and analyzing data to identify trends and patterns.

Conclusion

DataOps can be a powerful approach to log analysis, enabling teams to work more efficiently and collaboratively to identify and resolve issues quickly. By automating log collection and processing, using machine learning, fostering collaboration between teams, and treating data as a strategic asset, you can create a streamlined and effective workflow for log analysis. So, go ahead and implement DataOps in your organization, and start reaping the benefits.